School closures in all 50 states have sent educators and parents alike scrambling to find online learning resources to keep kids busy and productive at home. Website traffic to the homepage for IXL, a popular tool that lets students practice skills across five subjects through online quizzes, spiked in March. Same for Matific, which gives students math practice tailored to their skill level, and Edgenuity, which develops online courses.

All three of these companies try to hook prospective users with claims on their websites about their products’ effectiveness. Matific boasts that its game-based activities are “proven to help increase results by 34 percent.” IXL says its program is “proven effective” and that research “has shown over and over that IXL produces real results.” Edgenuity boasts that the first case study in its long list of “success stories” shows how 10th grade students using its program “demonstrated more than an eightfold increase in pass rates on state math tests.”

These descriptions of education technology research may comfort educators and parents looking for ways to mitigate the devastating effects of lost learning time because of the coronavirus. But they are all misleading.

None of the studies behind IXL’s or Matific’s research claims were designed well enough to offer reliable evidence of their products’ effectiveness, according to a team of researchers at Johns Hopkins University who catalog effective educational programs. And Edgenuity’s boast takes credit for substantial test score gains that preceded the use of its online classes.

Misleading research claims are increasingly common in the world of ed tech. In 2002, federal education law began requiring schools to spend federal dollars on research-based products only. As more schools went online and demand for education software grew, more companies began designing and commissioning their own studies about their products. But with little accountability to make sure companies conduct quality research and describe it accurately, they’ve been free to push the limits as they try to hook principals and administrators.

This problem has only been exacerbated by the coronavirus, as widespread school closures have forced districts to turn to online learning. Many educators have been making quick decisions about what products to lean on as they try to provide remote learning options for students during school closures.

A Hechinger Report review found dozens of companies promoting their products’ effectiveness on their websites, in email pitches and in vendor brochures with little or shoddy evidence to support their claims. Some companies are trying to gain a foothold in a crowded market. Others sell some of the most widely used education software in schools today.

Many companies claim that their products have “dramatic” and “proven” results. In some cases, they tout student growth that their own studies admit is not statistically significant. Others claim their studies found effects that independent evaluators say didn’t exist. Sometimes these companies make hyperbolic claims of effectiveness based on a kernel of truth from one study, even though the results haven’t been reproduced consistently.

The Matific study that found a 34 percent increase in student achievement, for instance, includes a major caveat: “It is not possible to claim whether or how much the use of Matific influenced this outcome as students would be expected to show some growth when exposed to teaching, regardless of what resources are used.”

IXL’s research simply compares state test scores in schools where more than 70 percent of students use its program with state test scores in other schools. This analysis ignores other initiatives happening in those schools and the characteristics of the teachers and students that might influence performance.

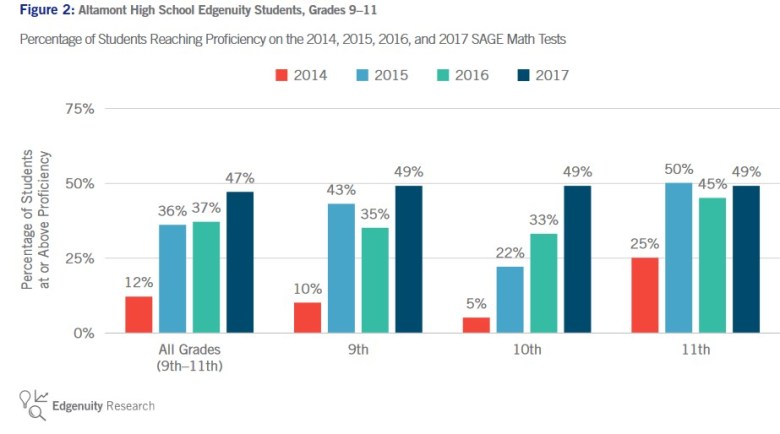

And Edgenuity’s boast of contributing to an eightfold increase in state math test pass rates for 10th graders at Altamont High School in Utah is based on measuring growth starting two years before the school introduced Edgenuity rather than just one year before. Over two years of actually using Edgenuity, 11th grade pass rates dropped in the featured school, ninth-grade pass rates fell and then recovered and 10th grade pass rates doubled — a significantly less impressive achievement than the one the company highlights.

Matific did not respond to repeated requests for comment. IXL declined to comment on critiques that its studies weren’t adequately designed to make conclusions about the impact of its program on student test scores. And Edgenuity agreed that it shouldn’t have calculated student growth the way it did, and said it would edit its case study, though at the time of publication the misleading data still topped its list of “success stories.”

When shoddy ed tech research leads educators to believe programs might really help their students, there are consequences for schools as well as taxpayers. Districts spent more than $12 billion on ed tech in 2019.

In some places, principals and administrators consider themselves well-equipped to assess research claims, ignore the bunk and choose promising products. But many people making the decisions are not trained in statistics or rigorous study design. They don’t have the skills to assess whether promising findings with one group of students may realistically translate to their own buildings. And, perhaps most importantly, they often don’t have the time or resources to conduct follow-up studies in their own classrooms to assess whether the products paid for with public dollars actually worked.

“We’re spending a ton of money,” said Kathryn Stack, who spent 27 years at the White House Office of Management and Budget and helped design grant programs that award funding based on evidence of effectiveness. “There is a private-sector motive to market and falsely advertise benefits of technology, and it’s really critical that we have better information to make decisions on what our technology investments are.”

In 2006, Jefferson County Public Schools, a large Kentucky district that includes the city of Louisville, began using SuccessMaker, a Pearson product designed to supplement reading and math instruction in kindergarten through eighth grade. Between 2009 and 2014, records provided by the district show it spent about $4.7 million on new licenses and maintenance for SuccessMaker. (It was unable to provide records about its earlier purchases.)

Typically within JCPS, school principals get to pick the curriculum materials used in their buildings, but sometimes purchases happen at the district level if administrators find a promising product for the entire system.

SuccessMaker, which at the time boasted on its webpage that it was “proven to work” and designed on “strong bases of both underlying and effectiveness research,” never lived up to that promise. In 2014, the district’s program evaluation department published a study on the reading program’s impact on student learning, as measured by standardized tests. The results were stark.

“When examining the data, there is a clear indication that SuccessMaker Reading is not improving student growth scores for students,” the evaluation said. “In fact, in most cases, there is a statistically significant negative impact when SuccessMaker Reading students are compared to the control group.”

The district stopped buying new licenses for the education software — but only after it had spent millions on a product that didn’t help student learning.

That same school year, Pearson paid for a study of SuccessMaker Reading in kindergarten and first grade. The company said the study found positive effects that were statistically significant, a claim it continues to make on its website, along with this summary of the program: “SuccessMaker has over 50 years of measurable, statistically significant results. No other digital intervention program compares.”

An independent evaluator disagrees.

Robert Slavin, who directs the Center for Research and Reform in Education at Johns Hopkins University, started Evidence for ESSA in 2016 to help schools decide if companies meet the evidence standards laid out in the 2015 federal education law, the Every Student Succeeds Act. The law sets guidelines for three levels of evidence that qualify products to be purchased with federal money: strong, moderate and promising. But companies get to decide for themselves which label best describes their studies.

“Obviously, companies are very eager to have their products be recognized as meeting ‘ESSA Strong,’ ” Slavin said, adding that his group is trying to fill a role he hopes the government will ultimately take on. “We’re doing this because if we weren’t, nobody would be doing it.”

Slavin’s organization tries to fill the gap by offering an independent assessment of the research that companies offer up for their products.

When Slavin’s team reviewed SuccessMaker research, they found that well-designed studies of the education software found no significant positive outcomes.

Slavin said Pearson contested the Evidence for ESSA determination, but a follow-up review by his team returned the same result. “We’ve been back and forth and back and forth with this, but there really was no question,” Slavin said.

Pearson stands behind its findings. “Our conclusion that these intervention programs meet the strong evidence criteria … is based on gold-standard research studies — conducted by an independent third-party evaluator — that found both SuccessMaker Reading and Math produced statistically significant and positive effects on student outcomes,” the company said in a statement.

Yet, SuccessMaker also hasn’t fared well when judged by another evaluator, the federally funded and run What Works Clearinghouse.

Launched in 2002, the What Works Clearinghouse assesses the quality of research about education products and programs. It first reviewed SuccessMaker Reading in 2009 and updated that review in 2015, ultimately concluding that the only Pearson study of the program that met What Works’ threshold for research design showed that the program has “no discernible effects” on fifth and seventh graders’ reading comprehension or fluency. (The Pearson study included positive findings for third graders that What Works did not evaluate.)

The Hechinger review of dozens of companies identified seven instances of companies giving themselves a better ESSA rating than Slavin’s site and four examples of companies claiming to have research-based evidence of their effectiveness when What Works said they did not. Two other companies tied their products to What Works’ high standards without noting that the organization had not endorsed their research.

Stack, the former Office of Management and Budget employee, said that despite almost 20 years of government attempts to focus on education research, “we’re still in a place where there isn’t a ton of great evidence about what works in education technology. There’s more evidence of what doesn’t work.” In fact, out of 10,654 studies included in the What Works Clearinghouse in mid-April, only 188 — less than 2 percent — concluded that a product had strong or moderate evidence of effectiveness.

Part of the problem is that good ed tech research is difficult to do and takes a lot of time in a quickly moving landscape. Companies need to convince districts to participate. Then they have to provide them with enough support to make sure a product is used correctly, but not so much that they unduly influence the final results. They must also find (and often pay) good researchers to conduct a study.

When results make a company’s product look good, there’s little incentive to question them, said Ryan Baker, an associate professor at the University of Pennsylvania. “A lot of these companies, it’s a matter of life or death if they get some evidence up on their page,” he said. “No one is trying to be deceitful. [They’re] all kind of out of their depth and all trying to do it cheaply and quickly.”

Many educators have begun to consider it their responsibility to dig deeper than the research claims companies make. In Jefferson County Public Schools, for instance, administrators have changed their approach to picking education software, in part because of pressure from state and federal agencies.

Felicia Cumings Smith, the assistant superintendent for academic services at JCPS, joined the district two years ago, after working in the state department of education and at the Bill & Melinda Gates Foundation. (The Gates Foundation is one of the many funders of The Hechinger Report.) Throughout her career she has pushed school and district officials to be smart consumers in the market for education software and technologies. At JCPS, she said, things have changed since the district stopped using SuccessMaker. The current practice is to find products that have a proven track record of success with similar student populations.

“People were just selecting programs that didn’t match the children that were sitting in front of them or have any evidence that it would work for the children sitting in front of them,” Cumings Smith said.

JCPS, one of the 35 largest districts in the country, is fortunate to have an internal evaluation department to monitor the effectiveness of products it adopts. Many districts can’t afford that. And even in JCPS, the programs that individual principals choose to bring into their schools get little follow-up evaluation.

A handful of organizations have begun to help schools conduct their own research. Project Evident, Results for America and the Proving Ground all support efforts by schools and districts to study the impact of a given product on their own students’ performance. The ASSISTments E-TRIALS project lets teachers perform independent studies in their classrooms. This practice helps educators better understand if products that seem to work elsewhere are working in their own schools. But these efforts reach relatively few schools nationwide.

Some people say educators shouldn’t shoulder the full responsibility of figuring out what works, and that vendors should be held to higher standards of truthfulness in the claims they make about their products.

The Food and Drug Administration, after all, sets limits to what drug and supplement manufacturers can say about their products. So far, ed tech companies have no such watchdog.

Sudden school closures have only complicated the problem, as educators rushed to find online options for students at home. “This is such a crisis that people are, quite understandably, throwing into the gap whatever they have available and feel comfortable using,” Slavin said.

Still, Slavin sees an opportunity in the chaos. Educators can use the next several months to examine ed tech research and be ready to use proven strategies, whether they’re education software or not, when schools do reopen, ultimately making them savvier consumers. “Students will catch up, and schools will have a taste of what proven programs can do for them,” he said. “At least, that is my hope.”

This story about education software was produced by The Hechinger Report, a nonprofit, independent news organization focused on inequality and innovation in education.

I have been using SchoolsPLP (ResponsiveEd) for the past year as the only HS Math teacher at my school. It has many problems: teaching errors, curriculum misalignment, and errors in the questions and answers.

How can we insist that the publishing company that produces this particular online curriculum be held accountable for editing prior to collecting money from unsuspecting schools?